Sometimes in software it can feel like you take two steps forward and one step back. One example of this is BEx-based Explorer Information Spaces when migrating from XI 3.1 to BO 4.0. In 4.0, SAP removed the ability to create information spaces against the legacy universe (unv) and instead required everyone to use […]

Category Archives: BI Platform

ENTICE Users with Interactive Dashboards that work…

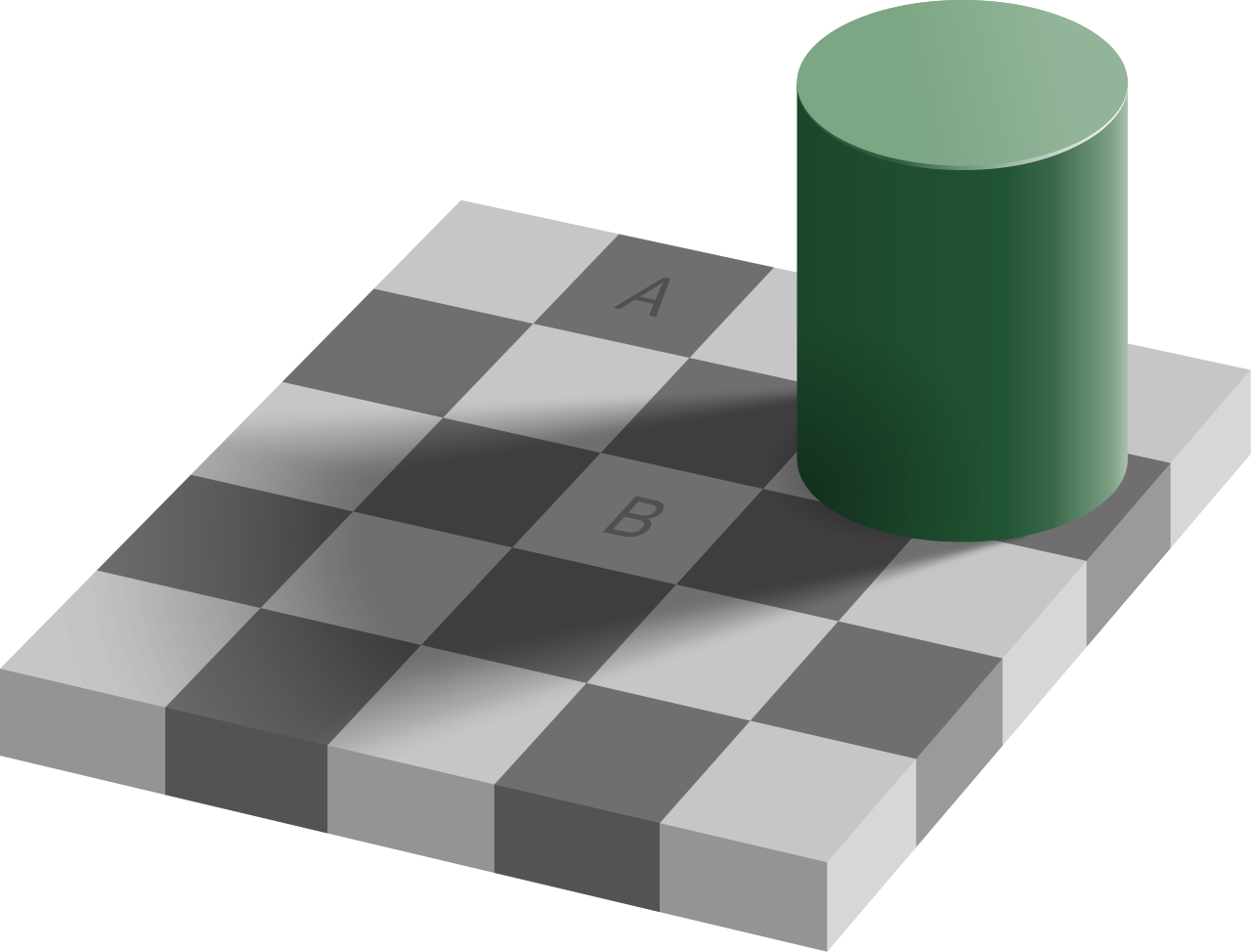

I was delighted to see yet another extremely informative webinar from Antivia. They partnered with FedEx to deliver a compelling webinar that discusses how organizations can use dashboards to simplify the consumption of key corporate information. Donald MacCormick kicked off the webinar with a reminder that we don’t always see what we think we […]

BusinessObjects XI 3.1 SP6 full build available…

I was reviewing my SAP Support Portal Newsletter after returning from vacation and discovered that SAP has released SAP BusinessObjects Enterprise XI 3.1 Service Pack 06 – Full Build. I really wasn’t expecting that, but I was happy to see it. It sure beats worrying about the correct ‘upgrade path’, such as the complications associated […]

Using Explorer and Lumira with SAP BW

This is a quick post to let you know that there is an excellent whitepaper available which explains everything you need to know about leveraging Explorer and Visual Intelligence with SAP BW. Organizations must leverage some type of acceleration technology – either HANA or BWA. Here is the original article: http://www.saphana.com/docs/DOC-2943 Here is a link […]

Building a Predictive Model

Last year SAP launched a new predictive solution for the BusinessObjects Business Intelligence Platform. Prior to 2012, SAP had partnered with SPSS to provide predictive functionality; however, once SPSS was acquired by IBM, it was time for SAP to develop its own solution. This gave birth to Predictive Analysis. In version 1.0, Predictive Analysis was […]